Biases David Deutsch Taught Me

I spent around 10 years learning a lot with David Deutsch (DD), and then the next 10 years not interacting with him much. And he mostly stopped putting out public content, so I moved on to engaging with other thinkers. With distance and with greater familiarity with other thinkers and ideas, I’ve reflected on what biases my mentor had and how they were passed on to me and affected me. This is my retrospective from my point of view.

Politics

DD liked politics. He read political news and talked about it a lot. Since I met DD in 2001, the most consistent, organized, serious content creation he ever did was writing around 500 posts for his political blog in around four years. Why didn’t he write articles about philosophy, physics or parenting? Why did he choose politics? I don’t know. I think he was wrong. Being overly interested in current politics is a common error I see with many people who ought to spend more attention and time elsewhere. This error affected me, more in the past, and still a little recently.

Was promoting the Iraq war really a cause DD needed to focus on? If he was just saying everything he thought (which is closer to what I did), that’d be more understandable. But he wasn’t. He was choosing his battles … and he chose the Iraq war as a major one to argue about.

DD didn’t talk about political philosophy or economics much. He wasn’t trying to teach people useful background that would enable them to judge political issues better for themselves. (He did some of that teaching privately with me, and some publicly, but not enough compared to how much he discussed political news.) He frequently spoke about current events and the latest political news. Why? Why is that the best thing to focus on? I think he was wrong. His interests were biased away from stuff with lasting importance or areas where he had the most valuable expertise.

I think being overly focused on current political news generally makes people’s lives worse, and it’s a common problem which I’ve contributed to some. I’ve criticized it, warned people against it, and taken steps to move away from it myself.

Israel

DD likes Israel and defends it in political debates. He often blogged about it. He called it a shining beacon of morality, or some words very similar to that. I agree with him. I didn’t initially. He was fighting against the mainstream media and I didn’t know much about it. But he convinced me pretty quickly. And he kept talking about it on a regular basis, year after year. Why? I think he’s basically right about the topic, and it’s a reasonably important issue, but why not spend that energy teaching me more epistemology? Or writing publicly to refute induction in a better, more organized and persuasive way? Or making videos doing commentary on his or Popper’s books?

How big a place Israel occupies in DD’s mind is a bias which he taught to me. He kept bringing Israel up and made it have an outsized role in our conversations (and on his political blog).

Learning a lot about Israel and how the media treats it unfairly had some value for me (e.g. it helped with understanding how biased and dishonest the mainstream media is), but I don’t think it was the best area to spend that much attention on and write blog posts telling others about.

I don’t think I’ve debated or blogged about Israel for years, so maybe I’ve gotten over this bias.

Polyamory

DD likes polyamory as a concept or abstract theory. Why pay so much attention to it when he wasn’t living that kind of life himself? I don’t know. Maybe because Sarah was into it. Regardless, he got me to pay attention to poly, too, and learn about the subject. Was that useful to me? Not especially. It’s OK. There are many interesting things in the world. Poly was an interesting topic to think about. But was it the best place my attention could have gone? I don’t think so.

Even if polyamory is a theoretically good idea – which I have some substantial doubts about – is it the best use of energy? If you are going to go against your culture on 1-5 major issues in your life, should it be on the list, or are there other things which are a higher priority? I’ll grant that romantic relationships could easily be included on a top 5 important areas list. But the main goal that’s worth the effort should be to avoid disaster (since chronic fighting, broken hearts and divorces are common), not to be poly – which is uncommon, risky and hard. Poly generally doesn’t have major benefits even if it works. And if you have a disaster doing poly, you’ll have a harder time than with a conventional disaster because most people will understand your problem less and be less helpful, sympathetic or supportive.

DD’s and Sarah’s Autonomy Respecting Relationships (ARR) said monogamy is the main problem in people’s relationships. They thought coercion causes all the trouble in both parenting and relationships because all irrationality comes from coercion. And they seemed to think that monogamy was the main source of coercion in romantic relationships. They suggested that if you get rid of the rules and restrictions in parenting or relationships, then people will almost automatically be rational and happy. I disagree.

I’ve been concerned that the poly ideas would hurt people – and that it’d be partly my fault – so I’ve written some stuff and done some podcasts to warn people against it.

Part of why poly can hurt people is mixing it with “rationalism”. If you think you’re super rational and right about everything, and you say monogamous people are attacking autonomy, then you can end up pressuring people to be poly or else you’ll judge them as irrational. I didn’t intentionally pressure people, but some people may have taken it that way, and this kind of theme comes up in multiple rationality-oriented poly communities, e.g. at Less Wrong too. The Less Wrong poly stuff has been a huge disaster that hurt people (I was never involved with that at all; I just read about it online). There are other poly communities that are more like “it works for me; I just wanna do my choice and be left alone not stigmatized” and don’t criticize most people for not being poly, which is less pressuring. ARR told people monogamy was irrational and that not being poly was limiting the growth of knowledge as well as basically opposing freedom and trying to be a jealous coercer, so that was really pressuring.

Discussion

Overrating discussion for learning is a bias DD didn’t have himself (maybe), but passed on to me. I overestimated the availability and value of discussion due to having so much access to valuable discussions with DD. I got used to that and expected it to continue basically forever. I didn’t expect DD to quit discussion. And I expected to find other very smart people who were interested in unbounded rational discussion, which is something DD told me was way more realistic than I now think it actually is. Consequently, I overestimated how much emphasis other people should put on discussion in their own lives. And I became overly reliant on discussion myself. Discussion is genuinely important and I’ve given some good reasons and arguments about it, but I also overestimated it.

I also overrated the long term value of discussions with other people who were less awesome than DD. I had lots of discussions that were good at the time, but having more, similar discussions lost value over time as they got more repetitive. I wanted to move on to some more advanced discussions, but it was hard to find discussion partners willing to pursue topics past the more accessible starting points. I didn’t foresee that problem.

I don’t think DD had the same issue as me, but he had some related issues. Most of what he’s written in his life has been discussion replies. He wrote two books in 20+ years and wrote a handful of articles. But he wrote thousands of TCS posts and thousands of other discussion forum posts, and he wrote multiple books worth of private IMs and emails to me. I think he has some perfectionist tendencies that make it hard for him to finish anything for a formal, serious or organized context. It’s easier for him to write for informal, disorganized, incomplete discussion than writing anything for publication or with higher standards or expectations.

DD did write thousands of posts at discussion forums, primarily email lists, but I don’t think he was personally biased in favor of discussion in the same way as me. In most of the posts, he was telling other people his ideas but wasn’t trying to interactively learn from or with them. And he was using the format to excuse imperfections, disorganization and incompleteness, whereas I was treating it more seriously. I actually thought critical discussions were a great way of doing truth seeking.

It was hard, but I’ve put a lot of work into moving away from discussion so that it’s more of an optional bonus for me instead of something central to my philosophy work. Over the last few years, I did a lot of unshared philosophy writing that wasn’t discussion based: it wasn’t prompted by other people’s questions or anything else they said.

Productive discussions require more skill than I realized. My and DD’s communities had a pro-discussion bias because we didn’t recognize how hard it is for people to discuss productively. Interestingly, I think many people also underestimate the skill needed for productive discussion but then reach a different conclusion: an anti-discussion bias. They notice that they don’t get much value from their discussions, so they conclude that discussion isn’t very valuable. They don’t realize how much their discussions could be improved with better discussion methods and skills.

David Deutsch’s Denial

This is part of a series of posts explaining the harassment against me which has been going on for years. The harassment is coming from David Deutsch and his community. I’ve tried to address the problem privately but they refused to attempt any private problem solving.

Justin emailed David Deutsch (DD) to ask him to respond to the Andy B harassment and to write a tweet asking DD’s fans to stop harassing. DD replied and it’s the only thing he’s said about the whole situation, as far as I know, so I’m analyzing it. I already analyzed how DD lied. Now I’m focusing on a different section (source):

I don't know this Andy B he [Elliot] speaks of. I'm not aware of anyone I know sending DDoS attacks or anything else covertly to Elliot. I'm not the chief of anything.

DD’s comments don’t respond to the claims at issue or to what’s being asked of him. What’s going on?

Straw Man

DD’s words look like a straw man reply. The claims at issue include:

- Andy harassed Elliot Temple (ET) and FI (ET’s community).

- One or more of DD’s community members DDoSed ET.

- DD’s associates know Andy (e.g. they follow him on Twitter and publicly talk with him there) and encourage Andy’s harassing actions.

- Andy and other harassers are DD’s fans, who have said they’re standing up for DD against DD’s enemy (ET).

- Something as simple as a tweet from DD might actually discourage the harassment.

- DD has a fan community who listen to him, respect him, and take cues from him and his associates.

But DD didn’t reply to any of those issues. Instead he says:

- DD doesn’t personally know Andy.

- As far as DD knows, none of DD’s personal associates covertly DDoSed ET or covertly sent him something else.

- He’s not a chief (no one said he was).

DD hasn’t actually denied any of the claims at issue. But he’s written it to sound like he’s issuing a denial.

And even if Brett Hall (for example) had covertly sent harassment to ET, including a DDoS, DD still wouldn’t be saying anything wrong as long as Brett never told DD that (and DD didn’t find out some other way). DD spoke about what he’s aware of, not what actually happened nor what the best explanation for the evidence is. (Brett or another of DD’s associates has probably written some anonymous, negative blog comments on curi.us, which actually would be sending something (“anything else”) covertly to Elliot. That’s the best explanation but the evidence is circumstantial.)

This apparent straw manning should be explained. What’s going on? I have two explanations: ignorance or word lawyering (carefully using technically true but misleading wordings).

Ignorance?

Maybe DD doesn’t know what the issues are because he didn’t read the info he was sent. If he doesn’t know what the claims in the discussion are, it would explain why his replies didn’t address them.

But in that case, why did DD reply like he was answering the issue instead of saying “I’m busy and won’t read this”? He gave the impression he knew what the relevant claims were and was responding to them with relevant denials. It’d be irresponsible and misleading to write DD’s response if he was simply unfamiliar with the claims and evidence.

And if DD was unfamiliar with what’s going on, then he must have gotten lucky. If you make claims about an issue you aren’t familiar with, usually you’ll screw up and say something that’s clearly wrong or is contradicted by facts you don’t know about. DD doesn’t appear ignorant: he seems to have known what statements he could make without fear of being directly refuted by the published evidence.

DD also found out about DDoSing somewhere. The email DD was responding to hadn’t specified DDoSing, so DD must have read or been told something else.

Careful Wording?

Another interpretation is that DD knows what’s going on and carefully wrote misleading statements. He may be intentionally responding to the wrong issues in order to say technically true statements while still making his reply sound negative towards ET. It looks like he was trying to bias his comments against ET without saying something false. (Trying to disown the harassment while being biased in favor of it is kind of contradictory.)

It looks to me like he was hoping people wouldn’t notice the straw manning and rhetorical tricks. It looks designed so people would react like this: “DD denied everything and wouldn’t risk his reputation by making factually false statements regarding crimes, therefore ET is probably lying.”

Aliases

How does DD know that he doesn’t know Andy? Andy has used 20+ fake names (even his main name, “Andy”, is likely a fake name). DD could be in contact with one of Andy’s fake names without realizing it. Getting DD’s attention and having some association with DD under a fake name is just the sort of thing Andy would love and might try repeatedly with different names.

Did DD even review all publicly known aliases of Andy before declaring that he doesn’t know Andy? Did DD ask his associates who know Andy what other aliases they know about? (I doubt it, considering that DD doesn’t seem to mind when his friends publicly associate with Andy, despite Andy’s involvement in threatening, persistent harassment, and other uses of force. DD doesn’t seem to mind having Andy two steps away on DD’s social graph via multiple routes; DD hasn’t even blocked Andy on Twitter and many of DD’s friends who he follows on Twitter are following Andy.)

“Knowing” Someone

On 2011-03-13, in IMs with DD, I suggested he should try having more discussions with a smart friend of mine. He replied:

[oxfordphysicist] I can't recall her ever addressing me. I don't know her at all.

DD had sent her at least 12 private emails within the previous month before denying knowing her at all. For each of those emails, she was one of only four recipients.

She had started talking in the TCS community in 2003 and written dozens of emails. DD had publicly replied to her, and he generally read most TCS emails.

She’d come up repeatedly over the years, e.g. DD had given her advice two years earlier. It was memorable, high-stakes advice about a child custody court case.

In 2010, I told DD one of her philosophical theories and his response referred to her by name.

I got DD to IM with her in 2006 (I set up a three-person IM chat). In that chat, she did address him and he said “good luck [her first name]” at the end. That wasn’t their only conversation; it’s just the first one I found.

If DD doesn’t know Andy in the same sense that he didn’t know her at all – meaning he only emails Andy privately 12 times in a month and Andy is active in a discussion community he co-founded, reads and replies to – then he does know Andy.

DD seems willing to use poor memory as an excuse (he said he “can’t recall”, which may have been true). Note that he didn’t have any significant incentive to lie then, as he does with Andy.

If DD forgot all about her, his memory can’t be trusted. If he remembered her, his statements can’t be trusted.

Maybe DD doesn’t think his interactions with her count as “knowing” someone at all. If so, DD could know Andy equally well and think that somehow doesn’t count as “knowing” Andy.

Conclusion

It’d be bad if DD denied stuff about harassment while being ignorant of what he was denying. But the ignorance explanation doesn’t work well, so I think something even worse is going on. It looks to me like he was word lawyering to make it look like he was denying my claims while actually denying other issues that weren’t in dispute.

Why would DD respond to harassment of me with word lawyering? Perhaps because he wants the harassment to happen (note that DD has been asked to say he doesn’t want it to happen, but has refused to say that), wants to use words against me, and also wants to carefully avoid responsibility by not getting caught in an error. (But I did catch him lying in a different sentence.) It’s hard to come up with alternatives that make sense, and DD isn’t providing any, nor are his fans.

Jason Crawford Letter

(This is also posted on the Letter website, with some minor cuts to get under the 1500 word limit.)

I agree with lots of what you say. I too value progress. And I think educating people on the history of progress, and teaching some general understanding of how modern civilization works, is a good project. And I agree with organizing lots of info around what problems people were trying to solve and what their solutions were.

You invited debate on Letter, and I agree with that too. I think interest in debate is important. So I’ll share a criticism and see how it goes. It relates most to your article: How to end stagnation?

I agree that there is a stagnation problem. And I think what you’re doing is productive and useful. But I don’t think your approach addresses the most important problems. I think there’s a deeper issue which must be addressed. You don’t have to do that personally, but some people do.

Funding, government and cultural attitudes to progress are downstream of philosophy. The root cause of the problems in those areas that you discuss is bad philosophy. So bad philosophy must be addressed.

Which philosophy? Crucial topics include how to think rationally, how to find and correct errors, how to judge ideas effectively, how to rationally resolve disagreements between ideas, and how to create knowledge. Ideas about these issues affect how people deal with ~all other topics. If people get it wrong, their thinking about government, economics, new inventions, etc., can easily go wrong, stay wrong, and be counter-productive.

A causal chain is: epistemology -> philosophy of science -> scientific practice -> lab results -> [more steps] -> new products on the market. Errors earlier in the chain cause errors downstream. And rationality actually comes up in every step in that chain, not just at the root.

There’s a lot to discuss about why ideas are important, or what to do about them. One of the major problems, in my view, is that people aren’t very good at resolving disagreements. Most debates are inconclusive. There are lots of errors that some people already know are errors, but that corrective knowledge doesn’t spread well enough. Our society isn’t effective enough at correcting errors even after they’re discovered. So we need something like a better way to organize debates. (I think the issue is primarily about methodology and organization, not the specifics of the issues that people disagree about).

You’ve probably heard something similar before about how big a deal rationality is. Many people talk about critical thinking but don’t know how to do anything effective about it. There’s a disconnect between theory and practice. (I think that means there’s a major problem and that theory should be improved, not that we should give up.) So instead of just discussing principles, I wanted to bring up a concrete example – one of the practical results downstream of my philosophical ideas.

I chose this example because it’s indirectly connected to you, and I thought it might surprise and interest you. You wrote (my bold):

Tyler Cowen has argued that "our regulatory state is failing us" when it comes to covid response (see also his interview in The Atlantic). Alex Tabarrok says that FDA delays have created an "invisible graveyard", which covid has now made painfully visible.

In 1997, Tabarrok did something to harm progress. (I included Cowen in the quote because he’s closely associated with Tabarrok, so it’s relevant to him too.) He wrote a negative review attacking the book Capitalism: A Treatise on Economics by George Reisman (which I consider the best economics textbook, but which is not very popular).

What happened? Was it a disagreement about an economic issue which they’ve been unable to resolve in the last 24 years? That’d be bad. Was it related to one of the schisms which prevent the Austrians from being very unified? If they can’t agree among themselves, how can they expect to persuade others? Inability to resolve internal disagreements is an ongoing problem plaguing many communities that have valuable ideas, but I don’t think it’s the main issue here.

(FYI, in the 90s, Tabarrok wrote four articles in Austrian journals, one of which he called “My most Rothbardian paper.” The blog title “Marginal Revolution” is an Austrian theme and Cowen worked as managing editor of the Austrian Economics Newsletter.)

Details are in my Refutation of Tabarrok’s Criticism of Reisman, which links to the original articles. There are multiple serious issues, some of which Reisman covered in his rebuttal. The most glaring issue is Tabarrok’s position that Capitalism “has surprisingly little to say on entrepreneurship”. After Reisman refuted this, Tabarrok repeated it anyway.

Here are entries in Capitalism’s table of contents which show that Reisman did cover entrepreneurship (I’ve read the book and judged the matter that way too; this is just a quick indication):

- The Benefit from Geniuses

- The General Benefit from Reducing Taxes on the "Rich"

- The Pyramid-of-Ability Principle

- Productive Activity and Moneymaking

- The Productive Role Of Businessmen And Capitalists

- 1. The Productive Functions of Businessmen and Capitalists

- Creation of Division of Labor

- Coordination of the Division of Labor

- Improvements in the Efficiency of the Division of Labor

- 2. The Productive Role of Financial Markets and Financial Institutions

- 3. The Productive Role of Retailing and Wholesaling

- 4. The Productive Role of Advertising

- 1. The Productive Functions of Businessmen and Capitalists

- Smith's Failure to See the Productive Role of Businessmen and Capitalists and of the Private Ownership of Land

- A Rebuttal to Smith and Marx Based on Classical Economics: Profits, Not Wages, as the Original and Primary Form of Income

- Further Rebuttal: Profits Attributable to the Labor of Businessmen and Capitalists Despite Their Variation With the Size of the Capital Invested

- The "Macroeconomic" Dependence of the Consumers on Business

I’ve seen this kind of thing before, e.g. a person argued with me rather persistently that David Deutsch’s book The Fabric of Reality doesn’t discuss solipsism (which is actually such a main theme that it has many sub-headings in the index). But that person wasn’t any sort of respected intellectual leader. Reisman’s book has over nine column inches related to entrepreneurship in the index. I know the number of inches because Reisman told Tabarrok in print. Tabarrok then ignored that and claimed again that Reisman had little to say about entrepreneurship.

I don’t think Tabarrok’s review was in good faith. (To connect this to earlier comments: his thinking methodology wasn’t rational.) I’ve tried very hard to be charitable, but I’m unable to find any viable interpretation where it was written in good faith. So I suspect Tabarrok is a social climber posing as an intellectual. A major cause of the problem is the philosophical errors (inadequate critical thinking and rational analysis) by the readers and fans who let people like Tabarrok get away with it and actually reward it. We need better error correction which is better able to sort out good ideas and thinkers (like Reisman) from bad ones (like Tabarrok, who is currently much more influential than Reisman).

A philosophical theory that my analysis relies on is that no data or arguments should ever be arbitrarily ignored without explanation. It’s unacceptable to say “90% of the evidence and arguments favor X, therefore I’ll conclude that X is probably right”. Every criticism, discrepancy, contradiction or problem has a cause in reality which has an explanation. So I would disagree with the attitude that “It’s just two bad articles, so let’s treat it as random noise”. Some errors can be explained as noise, fluctuations or variance but some can’t. Before attributing something to random noise, one should have an explanation of what is causing that random noise (e.g. medical data has noise due to variations in people’s bodies; manufacturing has noise due to imprecision of materials and tools; science has noise due to imperfect measurement; polling or survey data has noise due to people giving lazy, careless or dishonest answers). Also, people can change their minds and improve; they’re not permanently guilty of past errors; but Tabarrok hasn’t retracted this, nor explained what caused the error and how he later fixed that problem.

I do think Tabarrok should be given another chance to explain himself. I’d be grateful for the opportunity to correct my thinking if I’m in error. What I think would dramatically improve world progress (regardless of the truth of this specific case) is more people in his audience (and the audiences of every other intellectual) who would ask him to explain it – more people who question intellectual authority and expect intellectuals to respond rationally to critical arguments, and who know how to tell the difference between reasonable and unreasonable ideas. But without that – without audiences that know how to tell the difference – projects like spreading reasonable ideas about the roots of progress, or writing a great economics textbook, are in a poor position to thrive.

I think that, in a world where the fate of the careers of Tabarrok and Reisman matched their merit, progress wouldn’t be stagnating. But we live in a world where good ideas don’t rise to the top very well. And the problem is related to philosophy: the methods people use to debate, review, judge and spread ideas.

Update 2021-05-08:

I emailed Crawford the following:

Why haven’t you responded to my Letter? https://letter.wiki/conversation/1140

You invited debate. I believe you’ll agree with me that, if the claims in my letter are true, then it’d be worth your time. So not replying seems like you think I’m mistaken about something important but are unwilling to argue your case.

Crawford emailed back to say that he is never going to reply because I'm "quick to make personal attacks" (that comment is a personal attack against me). I take it that he refuses to consider that a public intellectual could be a social climber (at least one that he likes?), and that promoting people who take dishonest actions to harm other people's careers could be bad for progress. My claims were argued using analysis of published writing; Crawford gave no rebuttal and instead made an unargued attack on me. If Crawford is wrong, he's going to stay wrong, because he's blocking any means of error correction.

Also, it's bizarre that Crawford calls my commentary on issues from over 20 years ago "quick". My Letter comes over a year after my previous article on Tabarrok. I'm not rushing.

New Community Site Planning Update

For new community stuff, my current plan is to have:

- Critical Fallibilism website with curated articles (likely jekyll, wordpress or ghost)

- Critical Fallibilism forum, publicly viewable, $20 for an account that can post (Discourse is the leading candidate)

- Critical Fallibilism youtube channel

- maybe a new email newsletter setup (or have a way to get email notifications about new articles). or maybe keep using current email newsletter or kill it.

Currently planning to name it Critical Fallibilism b/c that sounds like the name of a philosophy. It has downsides (particularly it could sound too sophisticated and intimidate people). I considered some other names but I think an "ism" that sounds like a philosophy is better overall than something like "Learn, Judge, Act" or "Decisive Arguments" cuz ppl won't immediately know what that is. Those don't really work as a brand name either.

Plan is other stuff goes mostly inactive, e.g. Discord, FI google group and curi.us. I think people misuse chatrooms to try to say stuff that should be on a forum, so I'm inclined to just not have a chatroom in order to better focus all discussion in one place.

Taking suggestions on what website software to use and taking offers of help.

If making suggestions, FYI one of the main requirements for stuff is markdown support.

The purpose of paywalling forum posting is to increase quality and keep out harassers, not to make meaningful amounts of money.

I want somewhere good to discuss long term with good features. I think custom software is too much work and isn't going to happen. (Some coding help offers fell through. I don't want to spend the time to code a lot of features myself. I could code something simple like curi.us myself but I think getting modern features is a better plan.)

I plan to have different subforums. Current concept is something like:

- Unbounded Critical Discussion

  - Main

  - Debate

  - Other

- Casual, Gentle Learning

  - Main

  - Technical Details

  - Other

- Elliot's articles

- Community

That's 2 main areas with 3 subforums in each, and then 2 additional subforums.
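As a rough illustration only, the layout above could be written down as a small data structure, which also makes the count easy to check. The subforum names come from the list; the dictionary shape and the helper function are assumptions made for this sketch, not part of any forum software's configuration.

```python
# Hypothetical sketch of the planned subforum layout.
# Names are taken from the list above; the dict structure is an assumption.
FORUM_LAYOUT = {
    "Unbounded Critical Discussion": ["Main", "Debate", "Other"],
    "Casual, Gentle Learning": ["Main", "Technical Details", "Other"],
    "Elliot's articles": [],  # standalone subforum, no children
    "Community": [],          # standalone subforum, no children
}


def count_subforums(layout):
    """Count subforums: each child of a main area, plus each standalone entry."""
    return sum(len(children) if children else 1 for children in layout.values())


# Main areas are the entries that have child subforums.
main_areas = [name for name, children in FORUM_LAYOUT.items() if children]

print(len(main_areas), count_subforums(FORUM_LAYOUT))
```

With this layout there are 2 main areas and 8 subforums in total (3 + 3 + 2).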

"Casual, Gentle Learning" name is to be decided (suggestions welcome). The point is to have a section for more criticism and analysis of what ppl say and do (e.g. social dynamics, memes and dishonesty that they do), where you can't control critical tangents, discussions don't get ignored or forgotten after some time passes, etc. And a section for ppl (or specific topics) who don't want to deal with that and want to just arbitrarily, casually drop discussions, ignore relevant discussion continuations, ask a question and never follow up, not do Paths Forward, act socially normally etc.

A different way to view the distinction is a section limited to socially normal criticism and a section for rational criticism that could seem overly rude or aggressive to ppl. And persistence and criticism of things seen as tangential or irrelevant to the original topic are two of the main ways that comes up. In Gentle, if ppl wanna drop an issue they can just drop it. In Unbounded, you can't just drop a discussion and make a new topic about something else. If you post a new thread, ppl might respond about the pattern of not finishing discussions then creating new threads, but in Gentle they won't do that.

Unbounded is the section where discussions can involve reading a book and then coming back and continuing. It's where people might actually do whatever is effective to seek the truth without putting any arbitrary limits on it.

Part of the point – which I know ppl don't want – is to label who is actually presenting serious ideas in the public square for consideration as the best existing ideas, and who is not making serious claims meant to contribute to human knowledge. People want ambiguity about how good or serious their posts are.

Anyway I'll try to come up with a reasonably tactful but also reasonably clear way to explain the distinction for the forum.

"Technical Details" is meant for stuff that isn't of general interest or isn't accessible to everyone, e.g. posts involving coding or math (that way Main only has stuff for everyone). I'm not sure if having that subforum exist is necessary/worthwhile or not. I don't have that separation in the unbounded forum b/c topics there have no boundaries on what could be included – in other words, whatever topic you bring up, you can't know in advance that replies won't use math.

The "Other" sections will allow off-topic discussion, including politics, food, music and gaming. Main will allow a lot of topics but not everything. You could post about food, music or gaming in Main if your post had explicit philosophical analysis and learning stuff, so it was really obviously relevant to a rationality forum. But if you wanna talk about those things at all normally just put it in Other. I think abstract political philosophy or economics would be OK in Main but no discussions about current political news or events – those have to go in Other (or Debate).

I plan to post less in the Casual section than the Unbounded section. I want to have somewhere I can share my full critical analysis of stuff. I plan to restrict that criticism to:

- public figures

- publications (books, articles, serious blogs)

- public examples (reddit threads, tweets, casual blogs). i could omit the name and link cuz i don't wanna get them any negative attention but i like sources, context and giving credit, so undecided on the best way to handle this. (suggestions?)

- stuff posted in the Unbounded section

And I also plan to check with people who are new to that section that they know what they're consenting to and let them back out and be like "nevermind I'll go use the gentle section". I think just "this person posted in this section" isn't enough for nubs and they should be asked too before getting full crit. I'll also have a general recommendation written somewhere that new posters who aren't familiar with the community should use the Gentle section for at least a month.

One awkwardness is people might consent to receive criticism but then want to back out after receiving some criticism, but I don't want to delete analysis that's already written, nor do I want to stop analyzing something if I started posting analysis and thinking it through and still have more to say, nor do I want to stop other people from taking an interest, responding to my analysis, starting their own analysis, etc. after critical analysis has begun. Thoughts on how to handle that? (Note: I hope to disable deleting posts and/or save version history.) Maybe once there is an example of what ppl don't like, we can make ppl say they've read it and are OK with it b4 they can post in Unbounded.

Hopefully the casual/gentle section will provide most of what people wanted from a chatroom while being way better organized.

FI Basecamp Update

I removed some inactive people from the FI Basecamp in order to revert it to the free plan. Sorry; it’s nothing personal.

There were a few reasons:

I realized I cannot make the Basecamp large, given the ongoing harassment against my community (including vandalizing the Basecamp once), because Basecamp doesn’t have adequate security features. It’s designed for working with trusted co-workers.

People weren’t using the project management features.

curi.us is a better discussion forum IMO. It has publicly-viewable permalinks and some markdown support. If you want to have a discussion, please use that.

I decided it'd be better to restrict it now, rather than have more people join then restrict it later.

You can download an archive of all the content at:

https://curi.us/files/fi-basecamp-2021-04-04.zip

I’ll share an updated archive in the future with new posts so that everyone can read them.

I'm planning a new, better forum, although currently I'm making videos explaining The Beginning of Infinity. For updates on my new stuff, subscribe to my newsletter:

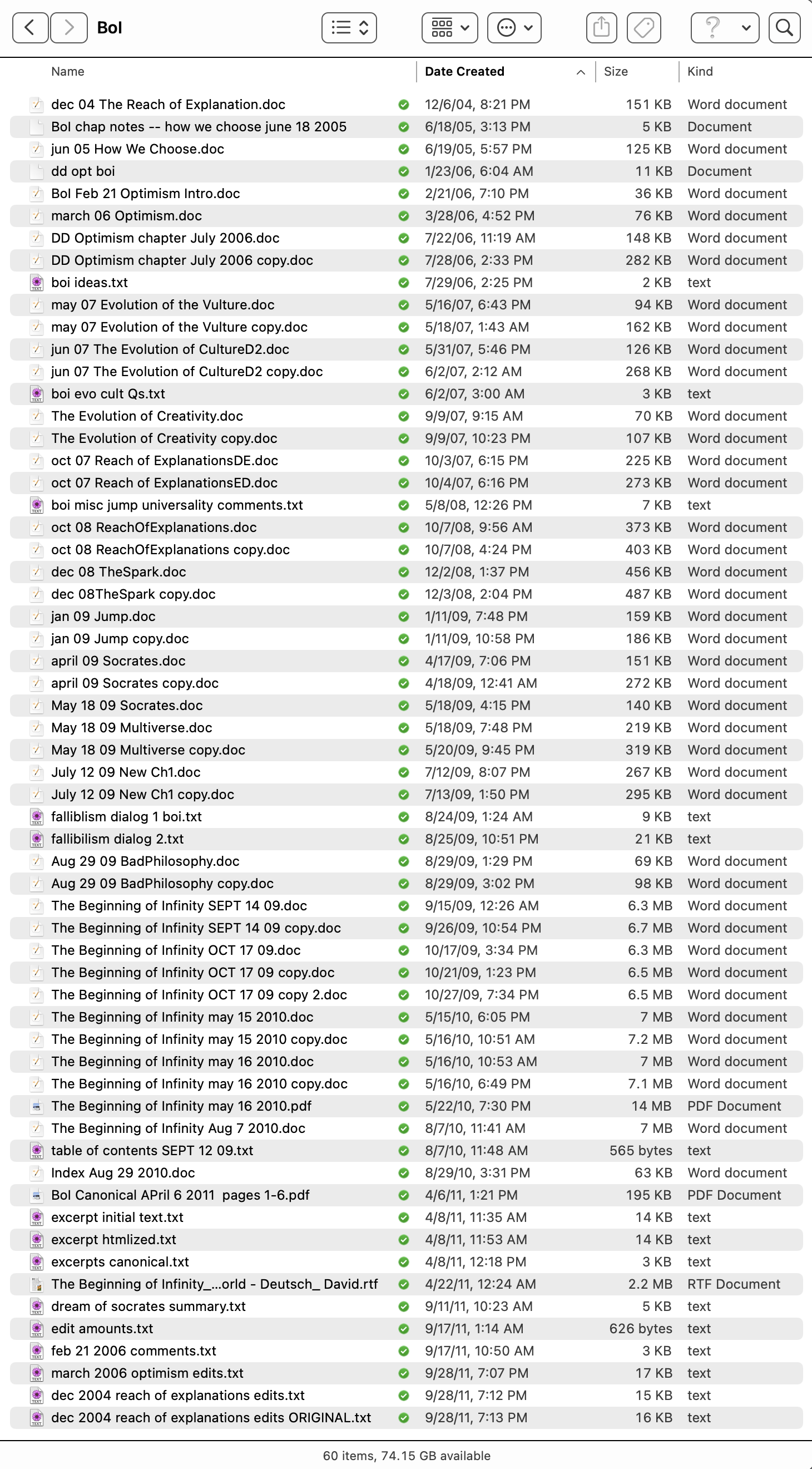

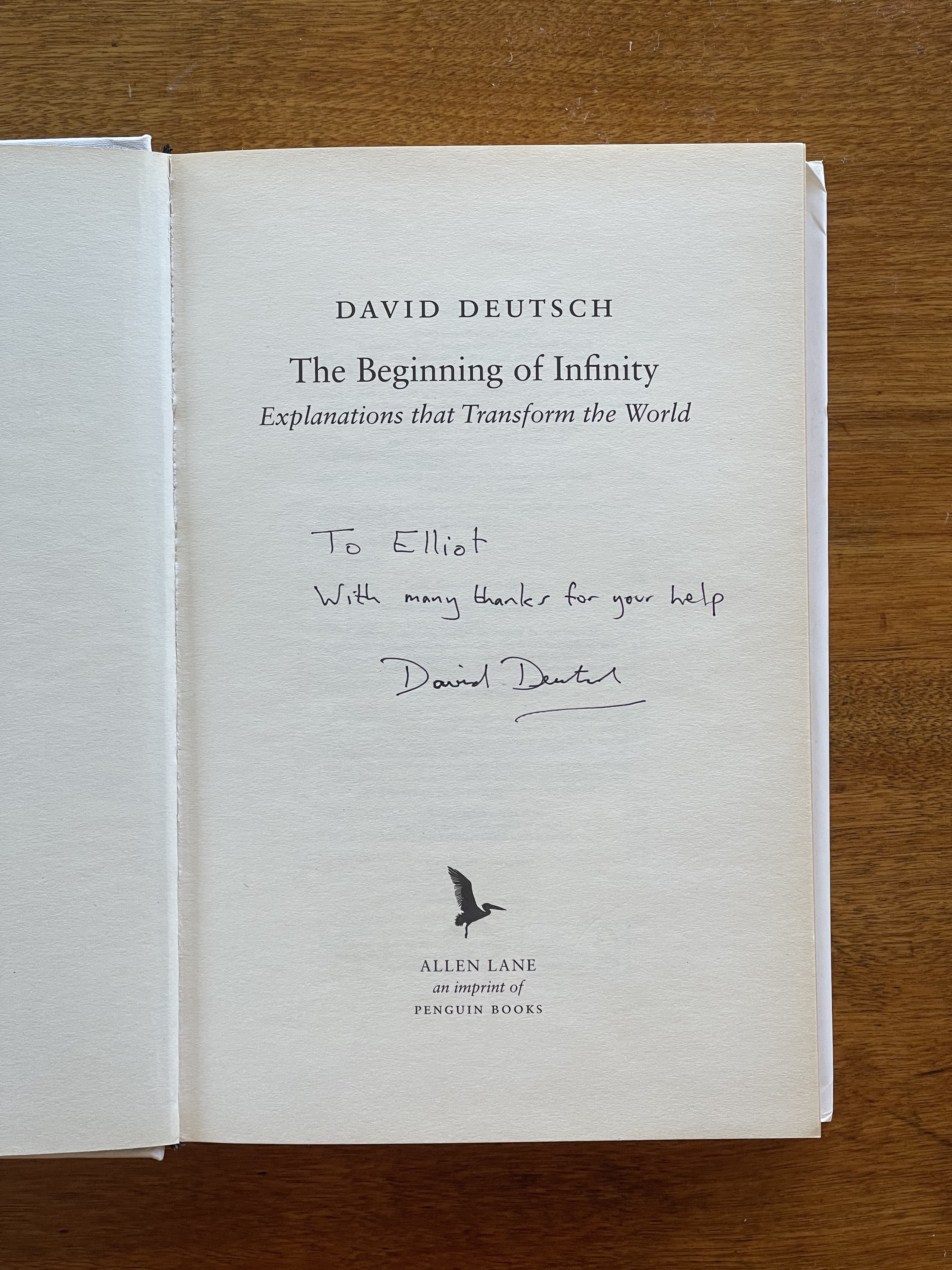

Was David Deutsch Using Me to Help with BoI?

I helped David Deutsch with his book, The Beginning of Infinity, for seven years. Soon after the book was done, he dropped me, and he's now hostile enough to personally take a leading role in harassing me by lying about me in writing.

It has just now occurred to me that he may have been using me to get help with his book. Now I'm unsure. I had never thought of this until a couple days ago. But academics getting younger people to do some work for them, which they can take credit for, is a common story. BoI wasn't like getting a younger co-author for a paper who you can actually get to do most of the writing. DD absolutely wrote BoI himself. But I did help a really unusually large amount, as DD requested. I wrote over 200 pages to help DD with BoI, which is an entire book worth of writing. And no one else helped similarly (nor could they have – DD wanted some of my unique skills, abilities, knowledge and perspective).

Part of why this occurred to me is that DD got Chiara Marletto to co-author some Constructor Theory writing with him (and also to write multiple other papers, also about DD's ideas, without DD). He's getting a younger postdoctoral researcher to do a lot of work that he isn't doing himself. And when you see two people with significantly different social status as co-authors on a paper, in general that means the lower status person did most of the work. If the higher status person had done most of the work, he wouldn't have given anyone co-author credit. You see this all the time with professors taking too much credit for the work of their grad students (either the grad student helps and gets little credit, or the grad student does most of it and gets his or her name on it and everyone assumes the professor did most of the smart stuff and guided the work, which is often inaccurate).

Note: People often use others without having full conscious knowledge of what they're doing. I think that's much more likely than DD using me while having clear, conscious knowledge of exactly what he was doing.

I learned a lot from interacting with DD. But I also had a reasonable expectation of more help from him in the future which never materialized. And my expectations were not just my own reasonable assumptions/guesses; for example, DD told me that one day he would write a foreword for my book.

Here's some info about what I did for BoI:

For more info on how I helped with BoI, see also the first few minutes of this video and this praise DD said about me.

Induction and AZ Vax Blood Clots

waneagony asks on my YouTube video Induction | Analyzing The Beginning of Infinity, part 5:

Could you explain some using the Astra Zeneca COVID vaccine as an example of induction being used (or not) and it being wrong (if so)?

Like more cases of blood clots seem to have been found among ppl that have taken the AZ vaccine.

I think comparing to see if there is an over representation of clots if one suspects reported cases to be high is ok. How one compares in a good way is an issue I think. Don’t know how to think about this well to avoid problems (don’t know if science is good at these things). I guess statistical significance is usually used here in science.

Claiming that clots are due to vaccine without an explanation is induction I think. ~pattern finding. There could be many other patterns that develop post vaccine that are highly (e.g. statistically significant) different between these groups too.

I’m not sure how, but I feel confused on this issue. I think we can discover that something bad is more prevalent post the vaccine in a legit way in the group that has taken the vaccine and I think it is valid to take precautions. But how do we know it’s because of the vaccine and not some other reason like just random or whatever? Could you explain a good way of thinking about this issue? Here, on the other yt channel, a post, a podcast, other, whichever would be appreciated.

First you need explanations of what’s going on. Here’s a simplified explanatory model (scientists have better ones):

- Blood has clotting agents to protect against losing too much blood from cuts

- When foreign materials are in the blood, they may incorrectly trigger internal clotting, which can do significant harm by blocking veins or arteries

- Some foreign materials cause clots and some don’t

- We know some materials are safe, some dangerous, and others unknown

- AZ vax is in the unknown category and we’re trying to figure out if it causes clots or not

Now imagine some simplified evidence: the blood clot rate with AZ vax goes from 0.01% to 75%.

Our guesses include “AZ vax causes clotting” and “AZ vax doesn’t cause clotting”. We need to try to criticize them.

Why did clotting jump to 75% if AZ vax doesn’t cause clotting? This argument criticizes the “doesn’t cause clotting” guess unless someone can provide some kind of rebuttal.

What if clotting jumped from 0.01% base rate to 1%? Might that be random bad luck? What if it was 5%? 10%? To answer that we need to know some things like the variance for blood clotting in general and the sample size of the trial. And we need to do some math. Statisticians have some ideas about how to do that math. We then take the math and use it in our critical arguments. E.g. “According to standard stats, a 1% clot rate in a trial of 50 people could easily just be bad luck. Therefore, I won’t reject the guess that the AZ vax is safe, unless you have some further criticism.” (My numbers are just made up btw. Not especially realistic.) Instead of “standard stats” you might name a particular model or theory in stats that you’re using, and there might be alternatives that get different results, in which case you’d have to use critical arguments regarding what model should be used or how multiple models should be used (e.g. you might be able to explain why a stats model is bad in general, or doesn’t fit this scenario well).

But if it’s 10% blood clots in the trial, then you say “According to standard stats, there’s only a 0.000001% chance that those blood clots would happen by random bad luck. So we should regard the AZ vax as dangerous.” That argument would be open to criticism and counter-argument. A critic could dispute any of the numbers used (e.g. maybe the measurements of the rate of blood clots in the general population were done incorrectly or are old and contradicted by more recent data and may have changed over time), or dispute the statistical model itself, or dispute some premise/requirement that’s required for the statistical model to apply, or dispute the math calculations and point out an error, or dispute the original explanatory model about how blood clots work, or could propose some other cause of the blood clots besides the AZ vax which could be solved in some way (e.g.: “everyone in the trial was in a room with Dr. Johnson who kept coughing up blood, and I’ve tested his blood and found it has Bozark’s Disease which can easily be passed on in an airborne manner in tiny quantities and then cause blood clots in others. so we should do another trial with a step where we screen everyone for Bozark’s Disease and I’m expecting we’ll see no increase in blood clots compared to the general population”).
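A minimal sketch of the kind of math those two stats arguments rely on. It assumes a plain binomial model (each person independently gets a clot with the base rate probability), which is only one possible stats model and is itself open to criticism. The trial size and clot counts are made up, and the function name `binomial_tail` is just for this illustration.

```python
from math import exp, lgamma, log, log1p


def binomial_tail(n, k, p):
    """Chance of k or more clots among n people if each clots with probability p.

    Assumes a plain binomial model (independent people, fixed base rate),
    which is a simplification chosen for illustration.
    """
    if not 0 < p < 1:
        raise ValueError("p must be strictly between 0 and 1")
    total = 0.0
    for i in range(k, n + 1):
        # log of C(n, i) * p**i * (1 - p)**(n - i), done in log space
        # so large n does not overflow
        log_term = (
            lgamma(n + 1) - lgamma(i + 1) - lgamma(n - i + 1)
            + i * log(p) + (n - i) * log1p(-p)
        )
        total += exp(log_term)
    return total


base_rate = 0.0001   # the 0.01% base rate from the example above
trial_size = 10_000  # made-up trial size

# One clot in the trial: easily bad luck under this model.
print(binomial_tail(trial_size, 1, base_rate))

# Ten clots in the trial: very unlikely to be bad luck under this model.
print(binomial_tail(trial_size, 10, base_rate))
```

The numbers this prints don't settle anything by themselves. They get used as premises in critical arguments, and a critic can still dispute the base rate, the independence assumption, or the choice of model.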

PS I did not look up anything about the AZ vax issue for writing the above and was just trying to speak about general principles. My loose impression from Twitter is that the AZ vax is probably safe and that the regulators focus on “risk we allow it and it hurts people” without comparing to or caring about “risk we delay it and the vax delay hurts people”. They want to make sure it’s super safe, which is a bad idea when delaying it is uncontroversially super unsafe.

PPS Scientists knowing more details makes a big difference (here’s an overview, which is not representative of all the technical details scientists know). Like they can consider which materials are in the AZ vax and whether some of those have already been tested previously. The vax has a virus with some DNA in it. Maybe that virus has already been used for other stuff before and we’re confident it wouldn’t cause blood clots. So then we’d have to consider if the DNA is somehow getting out of the virus within the blood stream, or what happens when a white blood cell surrounds the virus and what’s left over or excreted from that process. And we’d want to consider what happens once the virus gets in the cell, whether the DNA could end up in the blood stream, whether the mRNA created by the cell due to the DNA instructions could end up in the blood stream, whether something that’s within a cell but not directly in the blood stream can cause clotting just by changing the shape or surface of the cell, etc. And scientists already know a lot about those things, and understand explanations about how they work, and also know which types of materials generally do and don’t cause blood clots, and why, and what specific mechanisms form blood clots (like what chemical reactions happen, what different things in the body are involved, etc.). Often this explanatory knowledge and mental model of how this stuff works gives you a pretty good idea that it won’t cause blood clots – or in the alternative that it might and we need to carefully watch out for clotting – before you have any data at all. Maybe you can imagine how knowing a ton of detail about the stuff in this para would be 1) not data 2) very useful (possibly more useful than having a bunch of data. like imagine you could pick one: look at a bunch of data and stats about the AZ vax trials, or actually know all the kinds of stuff from this paragraph in tons of detail so you know how everything works. i think the second one would be better. the data without really knowing what i’m talking about would be less useful than knowing what i’m talking about. (i know what i’m talking about re the epistemology but not so much re the biology and medicine stuff. ideally a person would know epistemology and biology/medicine and also some stats and would have the full data. then they could judge better.))